Imagine this: You’re in a high-stakes race, but instead of sprinting flat-out to the finish, you deliberately slow down to conserve energy for the long haul. Sounds counterintuitive? Welcome to the hidden logic of success in a constrained world. From the tiniest cells in your body to cutting-edge AI labs and even cryptocurrency networks, many systems aren’t chasing raw speed or peak efficiency. They’re playing a smarter game: optimizing for survival. This isn’t about failure; it’s about strategic sacrifice. And as fresh research from UCLA and Stanford suggests, this trade-off could reshape how we understand aging, innovation, and resilience.

Cells Don’t Fail—They Adapt

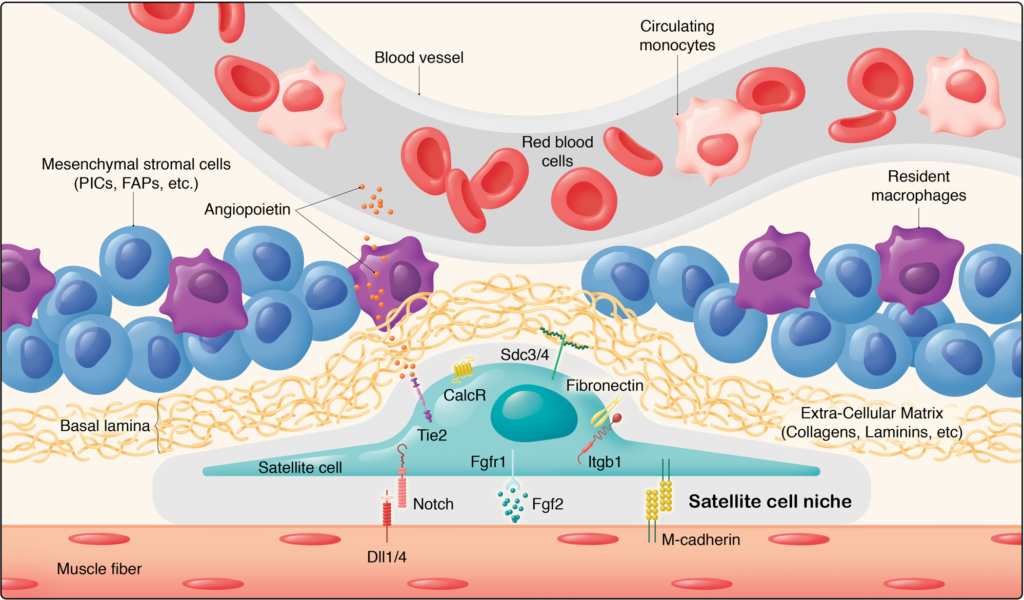

Picture your muscles as a battlefield where stem cells are the elite troops, constantly repairing damage from workouts, injuries, or just the grind of life. But as we age, these cells don’t simply wear out like old tires. A groundbreaking study published in Science flips the script on aging. Researchers at UCLA’s Eli and Edythe Broad Center of Regenerative Medicine and Stem Cell Research found that aging muscle stem cells (MuSCs) effectively make a trade-off: they dial back their repair speed in exchange for better survival under stress.

Here’s how it works. In older mice, these cells ramp up levels of a protein called NDRG1—about 3.5 times higher than in young cells. NDRG1 puts the brakes on mTOR, the cellular pathway that drives rapid repair and growth. The result? Slower muscle regeneration, but the cells hang in there longer, preserving the stem cell pool for future battles. Block NDRG1, and repair speeds up dramatically—but under repeated stress, those cells crash and burn faster.

This isn’t decay; it’s a form of “cellular survivorship bias,” where the cells that remain in old muscle are the ones best able to withstand stress. In the study, genetically removing NDRG1 improved short-term regeneration but compromised long-term survival, especially after multiple injuries. Senior author Dr. Thomas A. Rando compares it to sprinters versus marathon runners: young cells excel at quick bursts but falter over time, while aged cells prioritize endurance.
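The sprinter-versus-marathoner trade-off can be made concrete with a toy calculation. This is a minimal sketch under made-up assumptions, not the study’s model: at each injury the surviving stem-cell pool delivers repair in proportion to a per-cell `speed`, after which only a fraction `survival` of the pool lives on to the next injury. The function name and both parameter sets are hypothetical; “sprinter” loosely stands for NDRG1 blocked (fast, fragile) and “marathoner” for high NDRG1 (slow, durable).

```python
def cumulative_repair(speed: float, survival: float, injuries: int, pool: float = 1.0) -> float:
    """Toy model (hypothetical, not from the study): total repair over repeated injuries.

    At each injury the current stem-cell pool repairs at `speed` per unit of pool,
    then only a fraction `survival` of the pool remains for the next injury.
    """
    total = 0.0
    for _ in range(injuries):
        total += pool * speed  # repair delivered by the cells still alive
        pool *= survival       # stress-induced loss of stem cells
    return total


# Made-up parameters chosen only to illustrate the shape of the trade-off.
SPRINTER = {"speed": 1.0, "survival": 0.6}    # stands in for NDRG1 blocked
MARATHONER = {"speed": 0.6, "survival": 0.9}  # stands in for high NDRG1

for n in (1, 4, 5, 10):
    fast = cumulative_repair(injuries=n, **SPRINTER)
    slow = cumulative_repair(injuries=n, **MARATHONER)
    print(f"{n:>2} injuries: sprinter {fast:.2f}, marathoner {slow:.2f}")
```

With these invented numbers the sprinter delivers more total repair through four injuries, and the marathoner pulls ahead from the fifth injury on, because its pool shrinks far more slowly.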

But an honesty check: these experiments were done in mice, so whether the same trade-off holds in human muscle is still an open question. There’s no miracle anti-aging pill here yet, just a profound rethink of what “getting old” really means.

(Side-by-side images: left, slower regeneration in aged muscle with normal NDRG1; right, faster but more fragile repair when NDRG1 is blocked. Credit: Jengmin Kang/Rando Lab)

AI Hits the Same Wall

Now, zoom out from biology to the buzzing labs of AI research. A recent paper on arXiv explores systems that automate AI research itself—LLMs dreaming up new ideas, coding them, running experiments on GPUs, and iterating based on results. Sounds like sci-fi? It’s real, but it slams into the same constraint wall as those aging cells.

The setup: In one environment, the system tweaks pre-training methods for models like nanoGPT to slash training time. In another, it refines post-training techniques for math reasoning. Frontier LLMs generate ideas, and an automated executor implements them and launches the GPU experiments; it manages to implement a large fraction of the sampled ideas.

The hitch? Reinforcement learning (RL) on the execution results raises the average quality of the generated ideas but not the best ones: the model converges on safe, simple ideas, a failure known as “mode collapse” in which the AI loses its exploratory spark. The alternative: evolutionary search, which mimics natural selection to balance exploration and exploitation. Results? Significantly better performance than the baselines in both tasks (higher math-reasoning accuracy after post-training and faster pre-training), but the search tends to saturate early, and genuine breakthroughs beyond these narrow setups are rare.
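The exploration/exploitation balance behind evolutionary search fits in a few lines. This is a minimal sketch, not the paper’s system: there, an LLM proposes research ideas and an automated executor scores them with GPU experiments, whereas here a “candidate” is just a number and the hypothetical `bumpy_landscape` function stands in for running an experiment. Selection keeps the best candidates (exploitation); Gaussian mutation proposes new ones nearby (exploration).

```python
import random
from typing import Callable, List, Tuple


def bumpy_landscape(x: float) -> float:
    """Hypothetical stand-in for 'run the experiment and measure the result'.

    A low peak (score 1) at x = 1 and a higher peak (score 3) at x = 4,
    separated by a valley where candidates score 0.
    """
    return max(0.0, 1.0 - (x - 1.0) ** 2) + max(0.0, 3.0 - (x - 4.0) ** 2)


def evolutionary_search(
    fitness: Callable[[float], float],
    population: List[float],
    generations: int = 10,
    survivors: int = 4,
    children_per_parent: int = 3,
    mutation_scale: float = 1.0,
    seed: int = 0,
) -> Tuple[float, float]:
    """Truncation selection plus Gaussian mutation; returns (best candidate, its score)."""
    rng = random.Random(seed)
    pop = list(population)
    for _ in range(generations):
        # Exploitation: keep only the top-scoring candidates (elitism, so the best never gets worse).
        parents = sorted(pop, key=fitness, reverse=True)[:survivors]
        # Exploration: each parent spawns mutated variants at random offsets.
        children = [
            p + rng.gauss(0.0, mutation_scale)
            for p in parents
            for _ in range(children_per_parent)
        ]
        pop = parents + children
    best = max(pop, key=fitness)
    return best, fitness(best)


start = [0.0, 0.2, 0.5, 0.8, 1.0, 1.5]  # every starting idea sits near the low peak
_, cautious = evolutionary_search(bumpy_landscape, start, mutation_scale=0.01)
_, exploratory = evolutionary_search(bumpy_landscape, start, mutation_scale=1.0)
print(f"tiny mutations: best score {cautious:.2f}; larger mutations: best score {exploratory:.2f}")
```

With a tiny `mutation_scale` the search stays stuck on the low peak (its best score remains 1.0), a toy analogue of a search that has stopped exploring; a larger one gives it a chance to jump the zero-score valley toward the higher peak near x = 4. Truncation selection is only one simple variant of evolutionary search.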

The insight: Execution feedback and evolutionary methods help avoid collapse, but rapid exploitation comes at the cost of deeper exploration. Just like cells sacrificing speed for survival, AI trades quick wins for thorough, resilient search. Limitations abound: human experts still outperform in some areas, and scaling to true novelty remains elusive. Still, it’s a step toward more autonomous, self-improving AI.

The Pattern Everywhere

This isn’t coincidence; it’s a universal blueprint. In biology, cells choose endurance. In AI, algorithms favor resilience over rapid convergence. Look around, and the pattern pops up like a fractal:

- Bitcoin: Self-custody means ditching convenient exchanges for cold wallets. You lose ease, but you remove the exchange as a single point of failure: an exchange hack or account freeze can’t touch coins whose keys you hold offline. Peak convenience? Fragile. Survivorship? Ironclad.

- Open-Source Software: It trades slick, polished interfaces for raw robustness. Bugs get fixed by a distributed community rather than a single vendor: sometimes slower, but the code survives even if the company behind it shuts down.

- Mesh Networks: Forget efficient, centralized Wi-Fi hubs. These decentralized webs relay traffic from device to device, sacrificing speed (every extra hop adds delay) for independence, and they keep working in disasters when centralized infrastructure fails.

- Infrastructure: High-bandwidth memory (HBM) chips come from a handful of suppliers, a concentrated setup that is efficient but fragile; more decentralized designs give up some of that efficiency for supply-chain resilience. Even proposals like SpaceX’s orbital data centers trade ground-based convenience for cosmic redundancy.
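The speed-for-independence trade in the mesh-network example can be sketched as a graph problem. A minimal illustration, assuming a hypothetical six-device network: in hub-and-spoke form every message passes through one hub `H`, while the mesh here is the simplest possible one, a ring with no hub. Breadth-first search counts the fewest hops between two devices while skipping failed nodes; real mesh routing protocols are far more involved.

```python
from collections import deque
from typing import AbstractSet, Dict, Iterable, Optional, Set, Tuple

Graph = Dict[str, Set[str]]


def undirected(edges: Iterable[Tuple[str, str]]) -> Graph:
    """Build an adjacency map in which every link works in both directions."""
    graph: Graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def hops(graph: Graph, src: str, dst: str, failed: AbstractSet[str] = frozenset()) -> Optional[int]:
    """Fewest hops from src to dst via breadth-first search, avoiding failed nodes.

    Returns None when dst cannot be reached.
    """
    if src in failed or dst in failed:
        return None
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for neighbor in graph[node]:
            if neighbor not in seen and neighbor not in failed:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


# Hub-and-spoke: six devices, each linked only to the central hub "H".
star = undirected(("H", device) for device in "ABCDEF")
# Ring mesh: the same six devices linked to their neighbors, no hub at all.
ring = undirected(zip("ABCDEF", "BCDEFA"))

print("hub, A to D:", hops(star, "A", "D"))                   # 2
print("hub, A to D, hub down:", hops(star, "A", "D", {"H"}))  # None
print("mesh, A to D:", hops(ring, "A", "D"))                  # 3
print("mesh, A to C, B down:", hops(ring, "A", "C", {"B"}))   # 4
```

The hub gives every pair a short two-hop path but disconnects everyone the moment it fails; the ring pays with longer paths (up to three hops here, four when routing around a failure) yet keeps every surviving device reachable after any single failure.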

Everywhere, under real-world constraints—scarcity, attacks, uncertainty—systems ditch peak performance for something tougher: adaptability.

Why This Matters

We’ve been sold a myth: Faster is always better. Efficiency reigns supreme. But in a volatile world—pandemics, cyber threats, economic shifts—that mindset breeds brittleness. These discoveries expose the flaw: Peak optimization shines in stable bubbles but shatters under pressure.

Aging cells teach us why “anti-aging” hacks might backfire, trading short gains for long-term crashes. AI’s struggles help explain why full autonomy is still over the horizon: it needs external structure, such as execution feedback and evolutionary search, to avoid collapsing into a narrow set of safe ideas. Decentralized systems “lose” on metrics like speed but win wars of attrition. Institutions ignore this at their peril; they’re built for calm seas, not the storms we’re sailing into.

The Real Question

Systems don’t crumble; they evolve. When cells, code, and crypto all echo the same choice, one thing is clear: a fundamental shift is underway. The fastest don’t always finish first; the survivors do.

So, what are you optimizing for? Peak glory, or unbreakable grit?

Sources and Credits

UCLA Muscle Stem Cell Study

- Title: “Cellular survivorship bias as a mechanistic driver of muscle stem cell aging”

- Authors: Jengmin Kang, Daniel Benjamin, Qiqi Guo, Gurkamal Dhaliwal, Richard Lam, Thomas A. Rando, et al.

- Journal: Science, Vol. 391, Issue 6784, pp. 517-521 (published January 29, 2026)

- DOI: 10.1126/science.ads9175

- Lead Institution: Eli and Edythe Broad Center of Regenerative Medicine and Stem Cell Research at UCLA

- Senior Author: Dr. Thomas A. Rando

- Image Credit: Muscle tissue microscopy images courtesy of Jengmin Kang/Rando Lab (used for illustrative purposes in visual render)

AI Research Paper

- Title: “Towards Execution-Grounded Automated AI Research”

- Authors: Chenglei Si, Zitong Yang, Yejin Choi, Emmanuel Candès, Diyi Yang, Tatsunori Hashimoto

- Preprint: arXiv:2601.14525 [cs.CL] (submitted January 20, 2026)

- Link: https://arxiv.org/abs/2601.14525

This article draws on publicly available research summaries, press releases from UCLA, and the original papers/preprints. All interpretations and broader pattern recognition are the author’s synthesis and not direct quotes unless attributed.